The increasing involvement of AI in the design process has generated quite a lot of misunderstanding, fear, and fantasies. In your opinion, how can it serve architecture?

To demystify and perhaps better understand the term AI, one could replace “artificial intelligence” with “statistical learning.” There are other versions of AI, and not everything is statistical learning, but this is the version that is most relevant to us as architects. The particularity of statistical learning is that it involves a shift away from the world of description and toward one of observation. Let me explain what that means: with the arrival of machine learning and AI, rather than trying to describe and explain a phenomenon by making it “mathematical” through the use of formulas (what is called “describing”), scientific analysis can now be based upon a science of observation, of statistics. The idea is to repeat millions of observations of similar phenomena in order to extract their main characteristics. Rather than seeking to understand everything, to model everything perfectly, we can rely on “approximations” derived from observation, as they offer an alternative method of approaching or resolving phenomena that are difficult to describe explicitly.
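
The contrast between describing and observing can be made concrete with a toy sketch. Everything in it is an assumption made for illustration: the "phenomenon" is a hypothetical linear law, `described_law` is the hand-written formula, and `fit_line` recovers an approximation of that law from noisy observations alone, using ordinary least squares. It is not a model of any architectural workflow.

```python
import random


def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# "Description": a hypothetical explicit law, written down by hand.
def described_law(x):
    return 2.0 * x + 1.0


# "Observation": many noisy measurements of the same phenomenon.
rng = random.Random(0)  # fixed seed so the sketch is repeatable
xs = [rng.uniform(0.0, 10.0) for _ in range(5000)]
ys = [described_law(x) + rng.gauss(0.0, 0.5) for x in xs]

# The estimate uses only the observations, never the formula itself.
slope, intercept = fit_line(xs, ys)
print(f"estimated law: y = {slope:.2f} * x + {intercept:.2f}")
```

The estimated coefficients land close to the hand-written ones without the formula ever being given to the fitting step, which is the sense in which observation can stand in for description.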

These techniques are valuable, as it is often quite difficult to describe everything explicitly, not to mention that there are a great number of implicit criteria in architecture. Parametricism is a concrete example: coming from the domain of descriptive sciences, it only provides access to explicit criteria that can be encoded into its procedures. But implicit criteria, such as cultural or contextual data, are difficult to encode. This is why the buildings of Zaha Hadid, to take an emblematic example, could be built in an almost identical manner in any country, as no implicit cultural data are taken into account. Only local, inherently geometrical data, such as topography, are integrated; the form can then be parametrically adapted to the physical context, but this is the limit of the exercise. Conversely, statistical modeling attempts to encode some of the implicit parameters of architecture.

Rather than imagining a total disruption, it seems to me necessary to place the arrival of AI in architecture within a historical perspective. The world of architecture has undergone four major technological revolutions: modularity, computer-aided design (CAD), parametricism, and AI. These four movements highlight a progressive systematization of architectural methods, accompanied by a consolidation of the use of software tools. Modularity consists simply of relying on a modular model so as to anticipate some of the project's complexity. As for CAD, it assists the architect in their design. Parametricism, in turn, allows the deployment of major formal principles within architecture in a systematic manner. AI follows in the footsteps of these movements, and its novelty resides in its method and the implied passage from explicit description to statistical modeling, which in the end can have three main consequences for how architects work.

The first major change is the relationship with the tool. With parametricism, the process was binary: the architect would configure a model that would itself generate models. This generation represented the final step in the process; the resulting object would become the project, with only a limited possibility of editing once past the generation step. AI can take a different, sequential approach. By working with the architect, the machine helps by suggesting possibilities at different points in the project. Specialized for certain tasks or certain architectural scales, models can be called upon occasionally, before being erased to make way for the architectural gesture. This process of assisted iterative design allows the project to be refined by following a sequence of the “edit-suggestion-re-edit” kind, rather than the “configuration-generation” kind, as has been the case with parametricism. I have been able to illustrate this through my work and have also tested it concretely in my practice in order to verify its validity. It is quite clear that AI represents, at least in this respect, a technological and conceptual advantage when compared with parametricism.

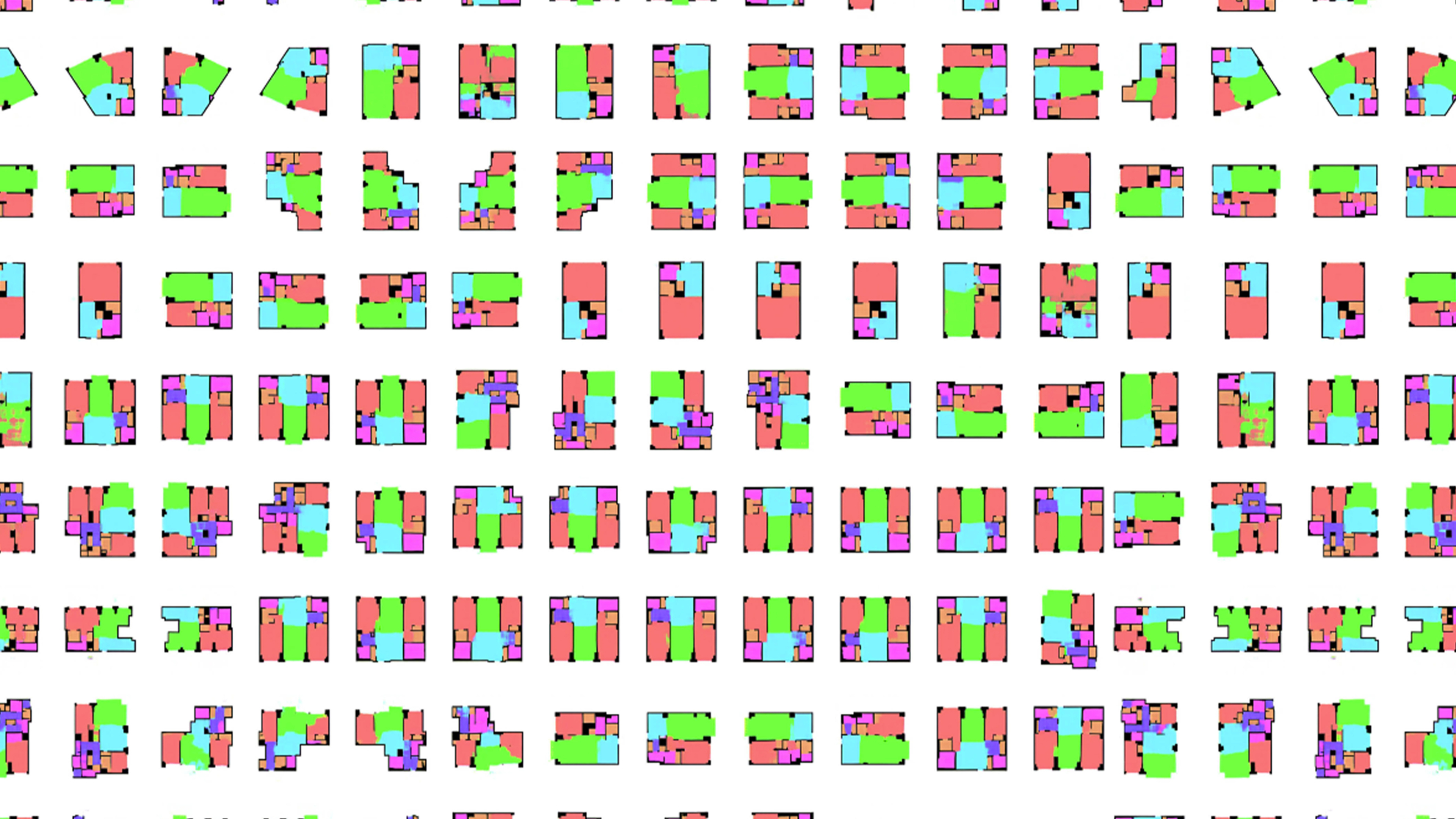

The second major contribution of AI reflects a completely different reality: the principle of polysemy. This concept, borrowed from semantics, considers that one word can have many meanings. It is the same for architecture: for a given architectural principle there is a myriad of corresponding forms. It is, for example, always interesting to see how many forms and typologies can respond to the apparently simple concept of an “apartment.” This is because the uses, the site, and the culture into which the architecture is inserted condition the architectural gesture in an infinite number of very specific forms. It is this relationship of one to many that AI can deal with through what we call “multimodality”: an AI model can, for an “input” value, offer a whole range of diverse options, while weighing the relative influence of different factors on each solution. The wealth and variety of the options generated can be a source of inspiration for the architect. It is also reminiscent of a pre-existing practice in architecture, that of the mass production of conceptual models that firms like OMA specialize in. AI allows us to enhance this methodology by deploying it on a much greater scale, all while broadening the range and variety of the achievable options. To conclude on this point, one must remember that “to create is to limit”: the infinite fields of options opened up by AI are only viable and desirable in light of the architect’s expertise, as the architect is the only one able to select the appropriate solutions and so limit the range of possibilities so that the architecture can ultimately be built.

The third major change linked to AI, and to my mind the most interesting, is to emphasize the notion of pertinence rather than that of performance. With parametricism, we optimize a set of parameters to target performance, whether in terms of energy, structure, or something else. AI seeks to achieve performance while complementing it with implicit criteria. Simply put, pertinence is performance enhanced by cultural and contextual dimensions. By taking into account specific data connected to the place and culture into which the architecture is inserted, AI can propose options that are closer to the reality on the ground. Design is then not only a formal exercise; it can take into account the relationship with a context in a particularly intimate and specific way. Though this consideration obviously existed before the arrival of AI, the latter can provide a particularly interesting methodology and a set of tools for dealing with a contextual approach in its most varied dimensions. In this, AI in architecture can position itself as a model that runs counter to technologies that aim for pure automation.

Assistance, polysemy, and pertinence are for me the three major contributions that AI can make to architecture.

Statistical learning implies “training.” How is this process carried out? What roles do context and the “teacher” play in this process?

Training is done through the use of a large number of images that are fed to the AI, teaching it to recognize them. Take the example of the notions of public and private spaces, which are not solely utilitarian but also connected to the “message” conveyed by the architecture. These notions are difficult to code explicitly, as it is very difficult to establish a mathematical formula that covers everything that separates them. As architects, however, we are capable of identifying these types of spaces on a plan quite easily, and this allows us to name them and to train the machine to recognize them. During my thesis, for example, I trained three AI models with this method so that they were able to generate the footprint of a building, the layout of the rooms, or the organization of furniture in an apartment.

For me, one of the most fascinating aspects of AI is that it can reverse the idea of a technology that “uproots.” With parametricism, architects all develop the same forms without taking local or cultural realities into account. This was indeed the “international 2.0” style that Schumacher wanted. Through statistics, AI learns contextual data and can become familiar with local realities. Depending on the material used to train it, a model of a Chinese or European city for example, the results are very different. It is actually possible to train a model to draft plans based on generic data, and then to make it specific to a given cultural context through the use of transfer learning. To give you an example, if we wanted to design a chair but only had a few hundred images of the object (which is insufficient), we could start with an AI that has been trained to draw faces based on millions of images of other faces. This seems counterintuitive, but in fact all we need to do is to refine its learning to allow it to specialize, based on a hundred or so images of chairs, which would only require a couple of hours of work. This allows a completely different project to be developed with an increased economy of means, building on the model’s core competency, which is the result of a long and expensive process requiring a massive amount of data.
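
The economy of means behind transfer learning can be sketched with a deliberately tiny model. This is a toy under stated assumptions, not the image models discussed above: the "model" is a single line y = w * x + b, the `generic` dataset stands in for the large, expensive training set, the `specific` dataset stands in for the small set of chair images, and the helper names `train` and `mse` are hypothetical. The point is only that the same short training budget goes much further when it starts from pretrained weights.

```python
def mse(data, w, b):
    """Mean squared error of the line y = w * x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train(data, w, b, steps, lr=0.1):
    """Plain gradient descent on the mean squared error, starting from (w, b)."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Large "generic" dataset (toy stand-in for the millions of generic images).
generic = [(i / 499, 3.0 * (i / 499)) for i in range(500)]
# Small "specific" dataset (toy stand-in for the few images of the new subject).
specific = [(i / 19, 3.2 * (i / 19) + 0.5) for i in range(20)]

# Long, expensive pretraining on the generic data.
w_pre, b_pre = train(generic, 0.0, 0.0, steps=1000)

# The same short budget on the specific data, with and without pretraining.
w_ft, b_ft = train(specific, w_pre, b_pre, steps=20)
w_scratch, b_scratch = train(specific, 0.0, 0.0, steps=20)

fine_tuned_loss = mse(specific, w_ft, b_ft)
scratch_loss = mse(specific, w_scratch, b_scratch)
print(f"fine-tuned: {fine_tuned_loss:.5f}, from scratch: {scratch_loss:.5f}")
```

With only twenty refinement steps, the model that starts from the pretrained weights fits the small dataset far better than the one trained from scratch, because most of the work was already done during pretraining.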

One of the issues with “learning” in AI is deciding whether or not this training phase, during which the model learns to emulate a task or an activity, is supervised. In other words, we must choose to what extent we explicitly indicate to the machine where success lies and which direction it should take in order to succeed in its training, or whether we allow it to decide in a slightly more autonomous manner which characteristics to emulate, in an unsupervised approach. In a scientific context, if this works, it is almost possible to be interested only in the results. Chris Anderson, the former editor-in-chief of the magazine Wired, actually wrote an article¹ describing the way that big data and AI seem to him to have rendered the scientific method obsolete. In other words, instead of understanding how these models manage to simulate certain phenomena, he invites us to “accept” their functioning in light of their efficiency, which signals to some extent the end of scientific theory. This may seem frightening, but from a pragmatic point of view it works: these models provide results. The problem remains that in architecture, meaning is our expertise; we cannot let go of meaning in exchange for pure quantitative results. In this debate between supervision and non-supervision in AI learning, I personally think that it is essential for us to supervise in order to understand the direction taken by the machine.

Architecture has a bias by definition, without which it would be reduced to a simple technical field. The issue then lies in this supervision, in the way that we manage the training set (the database ingested by the models) so as to insert our own expression, our own bias. With no supervision, the machine can take the path of performance in light of the established criteria, but it is impossible to understand how and why. The singular and personal expression of an architecture is crucial; this is indeed why the public continues to call on architects. It is obviously desirable that personal style does not disappear because of AI. In my thesis, I was able to study AI’s capacity to take into account an architectural style by using Baroque and Roman plans to train it. It is amazing to see AI emulate certain stylistic principles, all while allowing the hand of the creator to reuse them in the service of new creations.

AI doesn’t seem to you to signal the end of architecture or the end of style in architecture then?

No, not at all. Fundamentally, the way that the architect manages the AI’s training becomes the expression of their style and way of working. All of these notions that make up a large part of architecture and that we are afraid of losing with technology, such as sensitivity to context, do not in my opinion disappear with AI, but are on the contrary reborn in a new light. Nevertheless, for this to occur the architect must be at the origin of those models and of the way that they are managed beforehand, and must personally train them. Unlike parametricism, in its strictly technological reality AI has no reason to have a particular style or to produce a specifically recognizable architecture as such. The specific identity of an AI is based on statistics, and so its learning is based on the past, on what exists: in other words, on the style that we have chosen to present to it and the biases that we have indicated to it. The machine is not autonomous, and never will be when it comes to architecture. From this point of view, architects have the choice between making AI a paralyzing and terrifying myth, seeing it as a threat to the profession, or embracing the tool and realizing the technological opportunity that it represents.

Without necessarily saying that every architect must become an expert in AI, it seems crucial to me to be educated in certain simple notions in order to interact intelligently with other players in the field. It has become necessary to have a minimum understanding of the main issues, the costs, and the risks, but also the potential added value of AI. We are speaking here of a tool that can assist architecture by making certain tedious tasks less cumbersome, all while allowing the expressive and rich part of the job to be enhanced. In design, however, it can lead to a form of standardization if it is used in its most basic technological reality, due to the tendency of statistics to smooth out any differences. But AI does not have the specific vocation to emulate all of our faculties; it should help us while allowing human initiative to deal with the areas of rupture and divergence that are inherent to creativity. From this point of view, there is a true synergy of intelligences. In its best definition, architectural style remains a divergence, and not a rational convergence. I am convinced that AI can help us to highlight the quality and terms of this difference.